Over the past several years, cybersecurity risk management has become top of mind for boards. And rightly so. Given the onslaught of ransomware attacks and data breaches that organizations experienced in recent years, board members have increasingly realized how vulnerable they are.

This year, in particular, ransomware attacks directly affected the public, causing gasoline shortages, disrupting the meat supply and, worse, hitting hospitals and the patients who rely on life-saving systems. The attacks reflected the continued expansion of cyber-physical systems, which present new challenges for organizations and new opportunities for threat actors to exploit.

There should be a shared sense of urgency about staying on top of the battle against cyberattacks. John McClurg, Security columnist and Vice President and Ambassador-At-Large in Cylance’s Office of Security & Trust, explained it best in his latest Cyber Tactics column: “It’s up to everyone in the cybersecurity community to ensure smart, strong defenses are in place in the coming year to protect against those threats.”

As you build your strategic plan, priorities and roadmap for the year ahead, security and risk experts offer the following cybersecurity predictions for 2022.

Prediction #1: Increased Scrutiny on Software Supply Chain Security, by John Hellickson, Cyber Executive Advisor, Coalfire

“As part of the executive order to improve the nation’s cybersecurity previously mentioned, one area of focus is the need to enhance software supply chain security. There are many aspects included that most would consider industry best practice of a robust DevSecOps program, but one area that will see increased scrutiny is providing the purchaser, the government in this example, a software bill of materials. This would be a complete list of all software components leveraged within the software solution, along with where it comes from. The expectation is that everything that is used within or can affect your software, such as open source, is understood, versions tracked, scrutinized for security issues and risks, assessed for vulnerabilities, and monitored, just as you do with any in-house developed code. This will impact organizations that both consume and those that deliver software services. Considering this can be very manual and time-consuming, we could expect that Third-Party Risk Management teams will likely play a key role in developing programs to track and assess software supply chain security, especially considering they are usually the front line team who also receives inbound security questionnaires from their business partners.”
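The software bill of materials described above can be pictured as a simple inventory that is checked against known security advisories. The following is a minimal sketch, not a standard SBOM format: the component names, the `ADVISORIES` feed and the `flag_vulnerable` helper are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """One entry in a software bill of materials."""
    name: str
    version: str
    supplier: str  # where the component comes from


# Hypothetical bill of materials for one product (illustrative entries only).
SBOM = [
    Component("example-web-framework", "2.1.0", "open source"),
    Component("example-json-parser", "1.4.2", "open source"),
    Component("billing-core", "5.0.3", "in-house"),
]

# Hypothetical advisory feed: component name -> versions with known issues.
ADVISORIES = {
    "example-json-parser": {"1.4.1", "1.4.2"},
}


def flag_vulnerable(sbom, advisories):
    """Return components whose exact version appears in the advisory feed."""
    return [c for c in sbom if c.version in advisories.get(c.name, set())]


flagged = flag_vulnerable(SBOM, ADVISORIES)
for c in flagged:
    print(f"{c.name} {c.version} ({c.supplier}) needs review")
```

In practice the inventory would likely be generated by build tooling and the advisory data pulled from a vulnerability database, but the tracking idea is the same: every component, open source or in-house, is listed with its version and origin so it can be assessed.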

Prediction #2: Security at the Edge Will Become Central, by Wendy Frank, Cyber 5G Leader, Deloitte

“As the Internet of Things (IoT) devices proliferate, it’s key to build security into the design of new connected devices themselves, as well as the artificial intelligence (AI) and machine learning (ML) running on them (e.g., tinyML). Taking a cyber-aware approach will also be crucial as some organizations begin using 5G bandwidth, which will drive up both the number of IoT devices in the world and attack surface sizes for IoT device users and producers, as well as the myriad networks to which they connect and supply chains through which they move.”

Prediction #3: Boards of Directors Will Drive the Need to Elevate the Chief Information Security Officer (CISO) Role, by Hellickson

“In 2021, there was much more media awareness and senior executive awareness about the impacts of large cyberattacks and ransomware that brought many organizations to their knees. These high-profile attacks have elevated the cybersecurity conversations in the Board room across many different industries. This has reinforced the need for CISOs to be constantly on top of current threats while maintaining an agile but robust security strategy that also enables the business to achieve revenue and growth targets. With recent surveys, we are seeing a shift in CISO reporting structures moving up the chain, out from underneath the CIO or the infrastructure team, which has been commonplace for many years, now directly to the CEO. The ability to speak fluent threat & risk management applicable to the business is table stakes for any executive with cybersecurity & board reporting responsibilities. This elevated role will require a cybersecurity program strategy that extends beyond the standard industry frameworks and IT speak, and instead demonstrate how the cybersecurity program is threat aware while being aligned to each executive team’s business objectives that demonstrates positive business and cybersecurity outcomes. More CISOs will look for executive coaches and trusted business partners to help them overcome any weaknesses in this area.”

Prediction #4: Increase of Nation-State Attacks and Threats, by John Bambenek, Principal Threat Researcher at Netenrich

“Recent years have seen cyberattacks large and small conducted by state and non-state actors alike. State actors organize and fund these operations to achieve geopolitical objectives and seek to avoid attribution wherever possible. Non-state actors, however, often seek notoriety in addition to the typical monetary rewards. Both actors are part of a larger, more nebulous ecosystem of brokers that provides information, access, and financial channels for those willing to pay. Rising geopolitical tensions, increased access to cryptocurrencies and dark money, and general instability due to the pandemic will contribute to a continued rise in cyber threats in 2022 for nearly every industry. Top-down efforts, such as sanctions by the U.S. Treasury Department, may lead to arrests but will ultimately push these groups further underground and out of reach.”

And, Adversaries Outside of Russia Will Cause Problems

Noting that Russia is a safe harbor for ransomware attackers, Dmitri Alperovitch, Chairman of Silverado Policy Accelerator, said: “Adversaries in other countries, particularly North Korea, are watching this very closely. We are going to see an explosion of ransomware coming from DPRK and possibly Iran over the next 12 months.”

Ed Skoudis, President, SANS Technology Institute: “What’s concerning about this potential reality is that these other countries will have less practice at it, making it more likely that they will accidentally make mistakes. A little less experience, a little less finesse. I do think we are probably going to see — maybe accidentally or maybe on purpose — a significant ransomware attack that might bring down a federal government agency and its ability to execute its mission.”

Prediction #5: The Adoption of 5G Will Drive The Use Of Edge Computing Even Further, by Theresa Lanowitz, Head of Evangelism at AT&T Cybersecurity

“While in previous years, information security was the focus and CISOs were the norm, we’re moving to a new cybersecurity world. In this era, the role of the CISO expands to a CSO (Chief Security Officer) with the advent of 5G networks and edge computing.

The edge is in many locations — a smart city, a farm, a car, a home, an operating room, a wearable, or a medical device implanted in the body. We are seeing a new generation of computing with new networks, new architectures, new use cases, new applications/applets, and of course, new security requirements and risks.

While 5G adoption accelerated in 2021, in 2022, we will see 5G go from new technology to a business enabler. While 5G will affect new ecosystems, devices, applications, and use cases ranging from automatic mobile device charging to streaming, it will also benefit from the adoption of edge computing due to the convenience it brings. We’re moving away from the traditional information security approach to securing edge computing. With this shift to the edge, we will see more data from more devices, which will lead to the need for stronger data security.”

Prediction #6: Continued Rise in Ransomware, by Lanowitz

“The year 2021 was the year the adversary refined their business model. With the shift to hybrid work, we have witnessed an increase in security vulnerabilities leading to unique attacks on networks and applications. In 2022, ransomware will continue to be a significant threat. Ransomware attacks are more understood and more real as a result of the attacks executed in 2021. Ransomware gangs have refined their business models through the use of Ransomware as a Service and are more aggressive in negotiations by doubling down with distributed denial-of-service (DDoS) attacks. The further convergence of IT and Operational Technology (OT) may cause more security issues and lead to a rise in ransomware attacks if proper cybersecurity hygiene isn’t followed.

While many employees are bringing their cyber skills and learnings from the workplace into their home environment, in 2022, we will see more cyber hygiene education. This awareness and education will help instill good habits and generate further awareness of what people should and shouldn’t click on, download, or explore.”

Prediction #6: How the Cyber Workforce Will Continue to be Revolutionized Amid Ongoing Shortage of Employees, by Jon Check, Senior Director of Cyber Protection Solutions at Raytheon Intelligence & Space

“Moving into 2022, the cybersecurity industry will continue to be impacted by an extreme shortage of employees. With that said, there will be unique advantages when facing the current so-called ‘Great Resignation’ that is affecting the entire workforce as a whole. As the industry continues to advocate for hiring individuals outside of the cyber industry, there is a growing number of individuals looking to leave their current jobs for new challenges and opportunities to expand their skills and potentially have the choice to work from anywhere. While these individuals will still need to be trained, there is extreme value in considering those who may not have the most perfect resume for the cyber jobs we’re hiring for, but may have a unique point of view on solving the next cyber challenge. This expansion will, of course, increase the importance of a positive work culture as such candidates will have a lot of choices of the direction they take within the cyber workforce — a workforce that is already competing against the same pool of talent. With that said, we will never be able to hire all the cyber people we need, so in 2022, there will be a heavier reliance on automation to help fulfill those positions that continue to remain vacant.”

Prediction #7: Expect Heightened Security around the 2022 Election Cycle, by Jadee Hanson, CIO and CISO of Code42

“With multiple contentious and high-profile midterm elections coming up in 2022, cybersecurity will be a top priority for local and state governments. While security protections were in place to protect the 2020 election, publicized conversations surrounding the uncertainty of its security will facilitate heightened awareness around every aspect of voting next year.”

Prediction #8: A Shift to Zero Trust, by Brent Johnson, CISO at Bluefin

“As the office workspace model continues to shift to a more hybrid and full-time remote architecture, the traditional network design and implicit trust granted to users or devices based on network or system location are becoming a thing of the past. While the security industry had already begun its shift to the more secure zero-trust model (where anything and everything must be verified before connecting to systems and resources), the increased use of mobile devices, bring your own device (BYOD), and cloud service providers has accelerated this move. Enterprises can no longer rely on a specific device or location to grant access.

Encryption technology is obviously used as part of verifying identity within the zero-trust model, and another important aspect is to devalue sensitive information across an enterprise through tokenization or encryption. When sensitive data is devalued, it becomes essentially meaningless across all networks and devices. This is very helpful in limiting security practitioners’ area of concern and allows for designing specific micro-segmented areas where only verified and authorized users/resources may access the detokenized, or decrypted, values. As opposed to trying to track implicit trust relationships across networks, micro-segmented areas are much easier to lock down and enforce granular identity verification controls in line with the zero-trust model.”
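The devaluing of sensitive data through tokenization can be sketched in a few lines. This is a simplified illustration under stated assumptions: the `TokenVault` class, the `tok_` prefix and the service names are hypothetical, and a real zero-trust deployment would verify identity cryptographically rather than by comparing a name string.

```python
import secrets


class TokenVault:
    """Toy vault: swaps sensitive values for random tokens (illustration only)."""

    def __init__(self, authorized_users):
        self._authorized = set(authorized_users)
        self._values = {}  # token -> original sensitive value

    def tokenize(self, sensitive_value):
        # The token is random, so it reveals nothing about the original value.
        token = "tok_" + secrets.token_hex(8)
        self._values[token] = sensitive_value
        return token

    def detokenize(self, token, user):
        # Only verified, authorized callers may recover the real value.
        if user not in self._authorized:
            raise PermissionError(f"{user} is not authorized to detokenize")
        return self._values[token]


card_number = "4111 1111 1111 1111"  # well-known test card number
vault = TokenVault(authorized_users={"payments-service"})
token = vault.tokenize(card_number)

print(token)  # safe to store or pass across other systems
print(vault.detokenize(token, "payments-service"))
```

Systems outside the vault only ever hold the token, which is why the area that needs strict identity controls shrinks to the vault itself.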

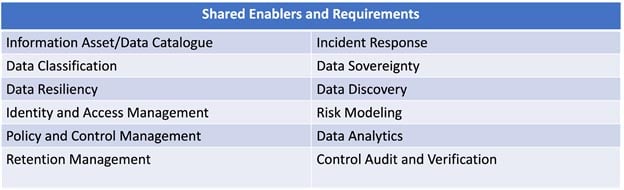

Prediction #9: Securing Data with Third-Party Vendors in Mind Will Be Critical, by Bindu Sundaresan, Director at AT&T Cybersecurity

“Attacks via third parties are increasing every year as reliance on third-party vendors continues to grow. Organizations must prioritize the assessment of top-tier vendors, evaluating their network access, security procedures, and interactions with the business. Unfortunately, many operational obstacles will make this assessment difficult, including a lack of resources, increased organizational costs, and insufficient processes. The lack of up-to-date risk visibility on current third-party ecosystems will lead to loss of productivity, monetary damages, and damage to brand reputation.”

Prediction #10: Increased Privacy Laws and Regulation, by Kevin Dunne, President of Pathlock

“In 2022, we will continue to see jurisdictions pass further privacy laws to catch up with the states like California, Colorado and Virginia, who have recently passed bills of their own. As companies look to navigate the sea of privacy regulations, there will be an increasing need to be able to provide a real-time, comprehensive view of what data is being processed and stored, who can access it, and most importantly, who has accessed it and when. As the number of distinct regulations continues to grow, the pressure on organizations to put in place automated, proactive data governance will increase.”

Prediction #11: Cryptocurrency to Get Regulated, by Joseph Carson, Chief Security Scientist and Advisory CISO at ThycoticCentrify

“Cryptocurrencies are surely here to stay and will continue to disrupt the financial industry, but they must evolve to become a stable method for transactions and accelerate adoption. Some countries have taken a stance that energy consumption is creating a negative impact and therefore facing decisions to either ban or regulate cryptocurrency mining. Meanwhile, several countries have seen cryptocurrencies as a way to differentiate their economies to become more competitive in the tech industry and persuade investment. In 2022, more countries will look at how they can embrace cryptocurrencies while also creating more stabilization, and increased regulation is only a matter of time. Stabilization will accelerate adoption, but the big question is how the value of cryptocurrencies will be measured. How many decimals will be the limit?”

Prediction #12: Application Security in Focus, by Michael Isbitski, Technical Evangelist at Salt Security

“According to the Salt Labs State of application programming interface (API) Security Report, Q3 2021, there was a 348% increase in API attacks in the first half of 2021 alone and that number is only set to go up.

With so much at stake, 2022 will witness a major push from nonsecurity and security teams towards the integration of security services and automation in the form of machine assistance to mitigate issues that arise from the rising threat landscape. The industry is beginning to understand that by taking a strategic approach to API security as opposed to a subcomponent of other security domains, organizations can more effectively align their technology, people, and security processes to harden their APIs against attacks. Organizations need to identify and determine their current level of API maturity and integrate processes for development, security, and operations in accordance; complete, comprehensive API security requires a strategic approach where all work in synergy.

To mitigate potential threats and system vulnerabilities, further industry-wide recognition of a comprehensive approach to API security is key. Next year, we anticipate that more organizations will see the need for and adopt solutions that offer a full life cycle approach to identifying and protecting APIs and the data they expose. This will require a significant change in mindset, moving away from the outdated practices of proxy-based web application firewalls (WAFs) or API gateways for runtime protection, as well as scanning code with tools that do not provide satisfactory coverage and leave business logic unaddressed. As we’ve already begun to witness, security teams will now focus on accounting for unique business logic in application source code as well as misconfigurations or misimplementations within their infrastructure that could lead to API vulnerabilities.

Implementing intelligent capabilities for behavior analysis and anomaly detection is also another way organizations can improve their API security posture in 2022. Anomaly detection is essential for satisfying increasingly strong API security requirements and defending against well-known, emerging and unknown threats. Implementing solutions that effectively utilize AI and ML can help organizations ensure visibility and monitoring capabilities into all the data and systems that APIs and API consumers touch. Such capabilities also help mitigate any manual mistakes that inadvertently create security gaps and could impact business uptime.”
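As a rough illustration of the anomaly detection idea, the sketch below flags API clients whose request volume sits far above a historical baseline. It uses a plain z-score rather than the AI and ML techniques the quote refers to, and the client names, numbers, threshold and `find_anomalies` helper are all assumptions made up for the example.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observed, threshold=3.0):
    """Return clients whose request count is far above the baseline.

    baseline: historical requests-per-minute samples for typical clients
    observed: mapping of client id -> current requests per minute
    threshold: how many standard deviations above the mean counts as anomalous
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        return []  # no variation in the baseline, so this sketch flags nothing
    return [
        client
        for client, count in observed.items()
        if (count - mu) / sigma > threshold
    ]


# Hypothetical traffic figures (illustrative only).
baseline = [98, 102, 100, 97, 103, 101, 99, 100]
observed = {"client-a": 101, "client-b": 104, "client-c": 950}

print(find_anomalies(baseline, observed))
```

A production system would model far more than volume, such as call sequences, parameters and data accessed, but the principle of comparing live behavior against a learned baseline is the same.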

Prediction #13: Disinformation on Social Media, by Jonathan Reiber, Senior Director of Cybersecurity Strategy and Policy at AttackIQ

“Over the last two years, pressure rose in Congress and the executive branch to regulate Section 230 and increased following the disclosures made by Frances Haugen, a former Facebook data scientist, who came forward with evidence of widespread deception related to Facebook’s management of hate speech and misinformation on its platform. Concurrent to those disclosures, in mid-November, the Aspen Institute’s Commission on Information Disorder published the findings of a major report, painting a picture of the United States as a country in a crisis of trust and truth, and highlighting the outsize role of social media companies in shaping public discourse. Building on Haugen’s testimony, the Aspen Institute report, and findings from the House of Representatives Select Committee investigating the January 6, 2021 attack on the U.S. Capitol, we should anticipate increasing regulatory pressure from Congress. Social media companies will likely continue to spend large sums of money on lobbying efforts to shape the legislative agenda to their advantage.”

Prediction #14: Ransomware To Impact Cyber Insurance, by Jason Rebholz, CISO at Corvus Insurance

“Ransomware is the defining force in cyber risk in 2021 and will likely continue to be in 2022. While ransomware has gained traction over the years, it jumped to the forefront of the news this year with high-profile attacks that impacted the day-to-day lives of millions of people. The increased visibility brought a positive shift in the security posture of businesses looking to avoid being the next news headline. We’re starting to see the proactive efforts of shoring up IT resilience and security defenses pay off, and my hope is that this positive trend will continue. When comparing Q3 2020 to Q3 2021, the ratio of ransoms demanded to ransoms paid is steadily declining, as payments shrank from 44% to 12%, respectively, due to improved backup processes and greater preparedness. Decreasing the need to pay a ransom to restore data is the first step in disrupting the cash machine that is ransomware. Although we cannot say for certain, in 2022, we can likely expect to see threat actors pivot their ransomware strategies. Attackers are nimble — and although they’ve had a ‘playbook’ over the past couple years, thanks to widespread crackdowns on their current strategies, we expect things to shift. We have already seen the opening moves from threat actors. In a shift from a single group managing the full attack life cycle, specialized groups have formed to gain access into companies who then sell that access to ransomware operators. As threat actors specialize in access into environments, it opens the opportunity for other extortion-based attacks such as data theft or account lockouts, all of which don’t require data encryption. The potential for these shifts will call for a great need in heavier investments in emerging tactics and trends to remove that volatility.”
