Many DevOps teams are advancing to CI/CD, some more gracefully than others. Recognizing common pitfalls and following best practices helps.

Agile, DevOps and CI/CD have all been driven by the competitive need to deliver value to customers faster. Each advancement requires some changes to processes, tools, technology and culture, although not all teams approach the shift holistically. Some focus on tools, hoping they will drive process changes, when process changes and goals should drive tool selection. More fundamentally, teams need to adopt an increasingly inclusive mindset that overcomes traditional organizational barriers and technology silos so the DevOps team can achieve an automated, end-to-end CI/CD pipeline.

Most organizations begin with Agile and advance to DevOps. The next step is usually CI, followed by CD, but the journey doesn’t end there because bottlenecks such as testing and security eventually become obvious.

At benefits experience platform provider HealthJoy, the DevOps team sat between Dev and Ops, maintaining a separation between the two. The DevOps team accepted builds from developers in the form of Docker images via Docker Hub. They also automated downstream Ops tasks in the CI/CD pipeline, such as deploying the software builds in AWS.

“Although it’s a good approach for adopting CI/CD, it misses the fact that the objective of a DevOps team is to break the barriers between Dev and Ops by collaborating with the rest of software engineering across the whole value stream of the CI/CD pipeline, not just automating Ops tasks,” said Sajal Dam, VP of engineering at HealthJoy.

Following are some common challenges and advice for dealing with them.

People

People naturally resist change, but change is a constant in software development and delivery tools and processes.

“I’ve found the best path is to first work with a team that is excited about the change or new technology and who has the time and opportunity to redo their tooling,” said Eric Johnson, EVP of Engineering at DevOps platform provider GitLab. “Next, use their success [such as] lower cost, higher output, better quality, etc. as an example to convert the bulk of the remaining teams when it’s convenient for them to make a switch.”

The most fundamental people-related issue is having a culture that enables CI/CD success.

“The success of CI/CD [at] HealthJoy depends on cultivating a culture where CI/CD is not just a collection of tools and technologies for DevOps engineers but a set of principles and practices that are fully embraced by everyone in engineering to continually improve delivery throughput and operational stability,” said HealthJoy’s Dam.

At HealthJoy, the integration of CI/CD throughout the SDLC requires the rest of engineering to closely collaborate with DevOps engineers to continually transform the build, testing, deployment and monitoring activities into a repeatable set of CI/CD process steps. For example, they’ve shifted quality controls left and automated the process using DevOps principles, practices and tools.

Component provider Infragistics changed its hiring approach. Specifically, instead of hiring experts in one area, the company now looks for people with skill sets that meld well with the team.

“All of a sudden, you’ve got HR involved and marketing involved because if we don’t include marketing in every aspect of software delivery, how are they going to know what to market?” said Jason Beres, SVP of developer tools at Infragistics. “In a DevOps team, you need a director, managers, product owners, team leads and team building where it may not have been before. We also have a budget to ensure we’re training people correctly and that people are moving ahead in their careers.”

Effective leadership is important.

“[A]s the head of engineering, I need to play a key role in cultivating and nurturing the DevOps culture across the engineering team,” said HealthJoy’s Dam. “[O]ne of my key responsibilities is to coach and support people from all engineering divisions to continually benefit from DevOps principles and practices for an end-to-end, automated CI/CD pipeline.”

Processes

Processes should be refined as necessary, accelerated through automation and continuously monitored so they can be improved over time.

“When problems or errors arise and need to be sent back to the developer, it becomes difficult to troubleshoot because the code isn’t fresh in their mind. They have to stop working on their current project and go back to the previous code to troubleshoot,” said GitLab’s Johnson. “In addition to wasting time and money, this is demoralizing for the developer who isn’t seeing the fruit of their labor.”

Johnson also said teams should start their transition by identifying bottlenecks and common failures in their pipelines. The easiest indicators of pipeline inefficiency are the runtimes of individual jobs and stages and the total runtime of the pipeline itself. To avoid slowdowns and frequent failures, teams should also look for recurring patterns among failed jobs.
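As a rough illustration of what checking those indicators can look like, the sketch below summarizes job history exported from a CI system. It ranks jobs by mean runtime, reports each job's failure rate, and estimates each pipeline's wall-clock runtime. The `JobRun` record shape and the sample numbers are hypothetical. The runtime estimate assumes that stages run one after another and that jobs within a stage run in parallel, so the slowest job sets each stage's pace.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class JobRun:
    """One job execution in one pipeline run (hypothetical record shape)."""
    pipeline_id: int
    stage: str
    job: str
    duration_s: float
    failed: bool


def pipeline_runtimes(runs):
    """Estimate wall-clock runtime per pipeline, assuming stages run sequentially
    and jobs within a stage run in parallel (the slowest job sets the stage time)."""
    slowest = defaultdict(float)  # (pipeline_id, stage) -> slowest job duration
    for r in runs:
        key = (r.pipeline_id, r.stage)
        slowest[key] = max(slowest[key], r.duration_s)
    totals = defaultdict(float)
    for (pipeline_id, _stage), duration in slowest.items():
        totals[pipeline_id] += duration
    return dict(totals)


def job_report(runs):
    """Mean duration and failure rate for each job, slowest job first."""
    grouped = defaultdict(list)
    for r in runs:
        grouped[(r.stage, r.job)].append(r)
    report = [
        {
            "stage": stage,
            "job": job,
            "mean_s": mean(r.duration_s for r in rs),
            "failure_rate": sum(r.failed for r in rs) / len(rs),
        }
        for (stage, job), rs in grouped.items()
    ]
    return sorted(report, key=lambda row: row["mean_s"], reverse=True)


# Hypothetical history for two pipeline runs.
runs = [
    JobRun(1, "build", "compile", 120.0, False),
    JobRun(1, "test", "unit", 300.0, False),
    JobRun(1, "test", "integration", 900.0, True),
    JobRun(2, "build", "compile", 110.0, False),
    JobRun(2, "test", "unit", 310.0, False),
    JobRun(2, "test", "integration", 950.0, False),
]

print(pipeline_runtimes(runs))  # {1: 1020.0, 2: 1060.0}
for row in job_report(runs):
    print(f"{row['stage']}/{row['job']}: {row['mean_s']:.0f}s, {row['failure_rate']:.0%} failed")
```

In this made-up data, the integration job dominates both runtime and failures, which makes it the first candidate to investigate. Pipelines that use explicit job dependencies rather than strict stage ordering would need a different runtime estimate.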

At HealthJoy, the developers and architects have started explicitly identifying and planning for software design best practices that will continually increase the frequency, quality and security of deployments. To achieve that, engineering team members have started collaborating across the engineering divisions horizontally.

“One of the biggest barriers to changing processes outside of people and politics is the lack of tools that support modern processes,” said Stephen Magill, CEO of continuous assurance platform provider MuseDev. “To be most effective, teams need to address people, processes and technology together as part of their transformations.”

Technology

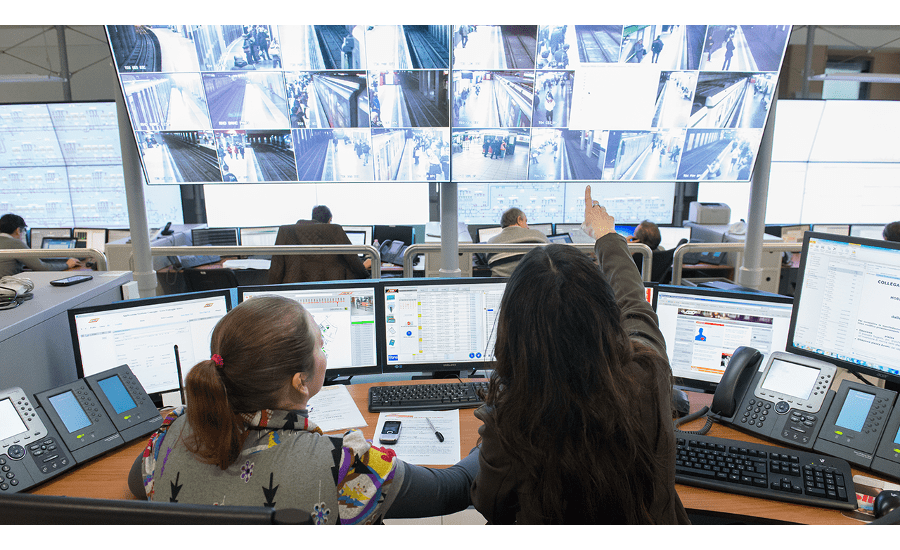

Different teams have different favorite tools, and that diversity can be a barrier to a standardized pipeline. Unlike a patchwork of tools, a standardized pipeline can provide end-to-end visibility and use automation to ensure consistent processes throughout the SDLC.

“Age and diversity of existing tools slow down migration to newer and more standardized technologies. For example, large organizations often have ancient SVN servers scattered about and integration tools are often cobbled together and fragile,” said MuseDev’s Magill. “Many third-party tools pre-date the DevOps movement and so are not easily integrated into a modern Agile development workflow.”

Integration is critical to the health and capabilities of the pipeline and necessary to achieve pipeline automation.

“The most important thing to automate, which is often overlooked, is automating and streamlining the process of getting results to developers without interrupting their workflow,” said MuseDev’s Magill. “For example, when static code analysis is automated, it usually runs in a manner that reports results to security teams or logs results in an issue tracker. Triaging these issues becomes a labor-intensive process and results become decoupled from the code change that introduced them.”

Instead, such results should be reported directly to developers as part of code review, since that is the point in the development process where developers can most easily fix them. Developers can also do so without involving other parties, although Magill stressed that developers, QA and security should all have input into which analysis tools are integrated into the development process.
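One way to keep analysis results coupled to the change that introduced them is to filter findings down to the lines a change actually adds before surfacing them in code review. The sketch below does that against a unified diff. The `Finding` shape, the function names and the sample diff are hypothetical. Posting the resulting comments to a review tool is not shown, because that step depends on each platform's own API.

```python
import re
from dataclasses import dataclass

# Unified-diff hunk header, e.g. "@@ -1,3 +1,4 @@" (a count defaults to 1 when omitted).
HUNK = re.compile(r"^@@ -\d+(?:,(\d+))? \+(\d+)(?:,(\d+))? @@")


@dataclass
class Finding:
    """One static-analysis result (hypothetical shape)."""
    path: str
    line: int
    message: str


def added_lines(diff_text):
    """Map each file in a unified diff to the new-file line numbers the change adds."""
    changed = {}
    path = None
    new_line = old_left = new_left = 0
    for raw in diff_text.splitlines():
        if old_left > 0 or new_left > 0:
            # Inside a hunk: consume lines until both line counts are used up.
            if raw.startswith("+"):
                changed[path].add(new_line)
                new_line += 1
                new_left -= 1
            elif raw.startswith("-"):
                old_left -= 1
            elif not raw.startswith("\\"):  # skip "\ No newline at end of file"
                new_line += 1  # context line exists in both versions
                old_left -= 1
                new_left -= 1
            continue
        if raw.startswith("+++ "):
            target = raw[4:].split("\t")[0]
            path = target[2:] if target.startswith("b/") else target
            changed.setdefault(path, set())
        else:
            m = HUNK.match(raw)
            if m:
                old_left = int(m.group(1) or 1)
                new_line = int(m.group(2))
                new_left = int(m.group(3) or 1)
    return changed


def review_comments(findings, diff_text):
    """Keep only findings on lines this change added, formatted as review comments."""
    changed = added_lines(diff_text)
    return [
        f"{f.path}:{f.line}: {f.message}"
        for f in findings
        if f.line in changed.get(f.path, set())
    ]


diff = """\
--- a/app.py
+++ b/app.py
@@ -1,3 +1,4 @@
 import os
+import subprocess
 def run(cmd):
-    os.system(cmd)
+    subprocess.run(cmd, shell=True)
"""

findings = [
    Finding("app.py", 3, "missing docstring"),  # pre-existing line, filtered out
    Finding("app.py", 4, "subprocess call with shell=True"),
]

print(review_comments(findings, diff))  # ['app.py:4: subprocess call with shell=True']
```

Findings on untouched lines are left for separate triage, so the developer sees only issues tied to the change under review. One limitation of this simple filter is that it misses problems a change causes on lines it did not edit.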

GitLab’s Johnson said the upfront investment in automation should be a default decision and that the developer experience must be good enough for developers to rely on the automation.

“I’d advise adding things like unit tests, necessary integration tests, and sufficient monitoring to your ‘definition of done’ so no feature, service or application is launched without the fundamentals needed to drive efficient CI/CD,” said Johnson. “If you’re running a monorepo and/or microservices, you’re going to need some logic to determine what integration tests you need to run at the right times. You don’t want to spin up and run every integration test you have in unaffected services just because you changed one line of code.”
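The "logic to determine what integration tests you need to run" that Johnson mentions can start as a dependency walk. Map changed files to the services that own them, then add every service that depends on those services, directly or transitively. The sketch below assumes a hypothetical monorepo layout (`SERVICE_DIRS`) and a hand-maintained dependency graph (`DEPENDS_ON`). Real build tools derive these from build metadata, and the result would then select which integration suites to run.

```python
from collections import deque

# Hypothetical monorepo layout: service name -> source directory prefix.
SERVICE_DIRS = {
    "auth": "services/auth/",
    "billing": "services/billing/",
    "notifications": "services/notifications/",
    "shared-lib": "libs/shared/",
}

# Hypothetical dependency graph: service -> services it depends on.
DEPENDS_ON = {
    "auth": {"shared-lib"},
    "billing": {"auth", "shared-lib"},
    "notifications": set(),
    "shared-lib": set(),
}


def directly_changed(changed_files):
    """Services whose source directory contains at least one changed file."""
    return {
        svc
        for svc, prefix in SERVICE_DIRS.items()
        if any(path.startswith(prefix) for path in changed_files)
    }


def affected_services(changed_files):
    """Changed services plus every service that depends on them, directly or transitively."""
    # Invert the graph so we can walk from a changed service to its dependents.
    dependents = {svc: set() for svc in DEPENDS_ON}
    for svc, deps in DEPENDS_ON.items():
        for dep in deps:
            dependents[dep].add(svc)

    affected = directly_changed(changed_files)
    queue = deque(affected)
    while queue:
        svc = queue.popleft()
        for user in dependents[svc]:
            if user not in affected:
                affected.add(user)
                queue.append(user)
    return affected


print(sorted(affected_services(["libs/shared/retry.py"])))
# ['auth', 'billing', 'shared-lib']
print(sorted(affected_services(["services/notifications/email.py"])))
# ['notifications']
```

A one-line change in the shared library fans out to every service built on it, while a change in the notifications service triggers only that service's suite. Files outside any service directory, such as shared CI configuration, match nothing here. A cautious setup might choose to run every suite in that case.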

At Infragistics, the lack of a standard communication mechanism became an issue. About five years ago, the company had a mix of Yammer, Slack and AOL Instant Messenger.

“I don’t want silos. It took a good 12 months or more to get people weaned off those tools and on to one tool, but five years later everyone is using [Microsoft] Teams,” said Infragistics’ Beres. “When everyone is standardized on a tool like that the conversation is very fluid.”

HealthJoy encourages its engineers to stay on top of the latest software principles, technologies and practices for a CI/CD pipeline, which includes experimenting with new CI/CD tools. They’re also empowered to effect grassroots transformation through proofs of concept (POCs) and to share knowledge of the CI/CD pipeline and its advancements through collaborative experimentation, internal knowledge bases and tech talks.

In fact, the architects, developers and QA team members have started collaborating across the engineering divisions to continually plan and improve the build, test, deploy and monitoring activities as integral parts of product delivery. And the DevOps engineers have started collaborating in the SDLC and using tools and technologies that allow developers to deliver and support products without the barrier the company once had between developers and operations. […]